Spark RDD Reduce Example

The Spark RDD reduce() aggregate action is used to calculate the min, max, and total of the elements in a dataset. reduce is a Spark action that aggregates the elements of a data set (RDD) using a function. In PySpark, reduce(f: Callable[[T, T], T]) → T reduces the elements of this RDD using the specified commutative and associative binary operator. The material collected here includes a Java Spark RDD reduce() example that finds a sum, and two examples that use Python's reduce from the functools library to repeatedly apply operations to … In the examples, the SparkConf is created first, and … See also "Understanding treeReduce() in Spark". To summarize, reduce, excluding driver-side processing, uses exactly the …
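The idea of reducing a dataset to a min, max, or total with a two-argument function can be sketched without a cluster. The following is a minimal pure-Python illustration, not Spark's implementation: the helper `reduce_partitions` and the sample `partitions` list are hypothetical names made up for this sketch. It reduces each partition locally with `functools.reduce` and then merges the partial results, which is why the function needs to be commutative and associative.

```python
from functools import reduce


def reduce_partitions(partitions, f):
    """Hypothetical helper: reduce each non-empty partition locally,
    then merge the per-partition results with the same function."""
    partials = [reduce(f, part) for part in partitions if part]
    if not partials:
        raise ValueError("cannot reduce an empty dataset")
    return reduce(f, partials)


# Made-up sample data split into three "partitions" (one of them empty).
partitions = [[3, 9, 1], [], [7, 4]]

total = reduce_partitions(partitions, lambda a, b: a + b)
smallest = reduce_partitions(partitions, lambda a, b: a if a < b else b)
largest = reduce_partitions(partitions, lambda a, b: a if a > b else b)

print(total, smallest, largest)  # 24 1 9
```

With PySpark installed, the same lambdas could be passed to `rdd.reduce(...)` on an RDD created with `sc.parallelize(...)`; that part is left out here so the sketch runs with the standard library alone.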

from intellipaat.com


What is RDD in Spark Learn about spark RDD Intellipaat


From stackoverflow.com

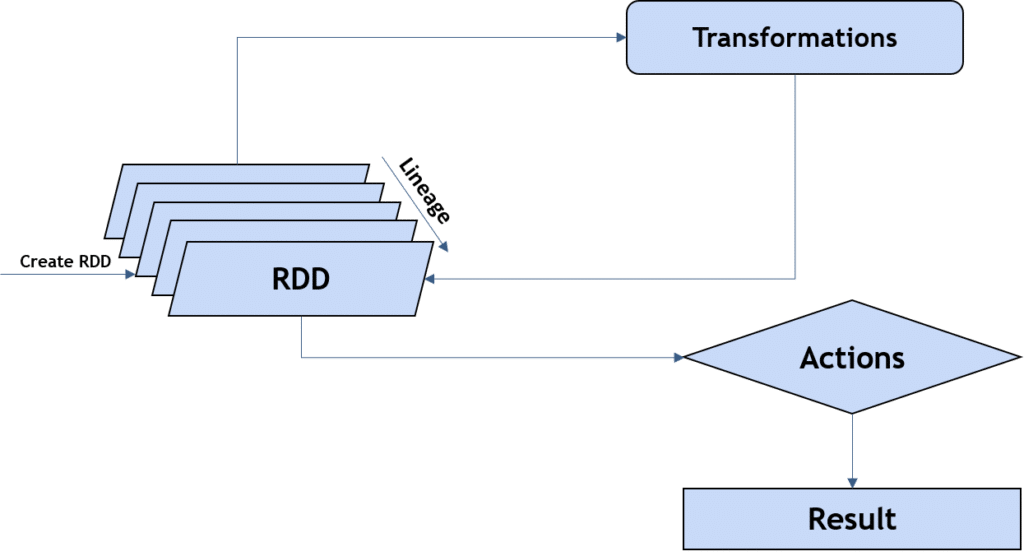

rdd Transformation process in Apache Spark Stack Overflow

From www.youtube.com

Spark RDD vs DataFrame Map Reduce, Filter & Lambda Word Cloud K2

From blog.csdn.net

Spark RDD/Core Programming API Introductory Series: RDD examples (map, filter, flatMap, groupByKey, …

From www.simplilearn.com

Using RDD for Creating Applications in Apache Spark Tutorial Simplilearn

From spark.apache.org

RDD Programming Guide Spark 3.5.2 Documentation

From www.cloudduggu.com

Apache Spark Transformations & Actions Tutorial CloudDuggu

From www.youtube.com

What is RDD in Spark How to create RDD How to use RDD Apache

From intellipaat.com

What is RDD in Spark Learn about spark RDD Intellipaat

From www.analyticsvidhya.com

Create RDD in Apache Spark using Pyspark Analytics Vidhya

From www.cloudduggu.com

Apache Spark RDD Introduction Tutorial CloudDuggu

From proedu.co

Apache Spark RDD reduceByKey transformation Proedu

From blog.csdn.net

Hadoop & Spark MapReduce comparison & framework design and understanding: briefly describe your understanding of the hafs and MapReduce frameworks (CSDN blog)

From www.projectpro.io

What is Spark RDD Action Explain with an example

From www.simplilearn.com

RDDs in Spark Tutorial Simplilearn

From ittutorial.org

PySpark RDD Example IT Tutorial

From sparkbyexamples.com

PySpark RDD Transformations with examples Spark by {Examples}

From www.analyticsvidhya.com

Spark Transformations and Actions On RDD

From www.youtube.com

Spark RDD Transformations and Actions PySpark Tutorial for Beginners